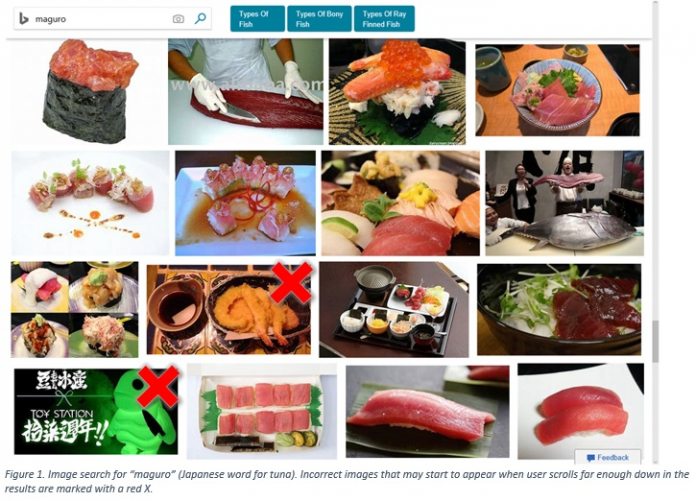

The Bing team will also present the findings at the Computer Vision and Pattern Recognition Conference (CVPR) in Salt Lake City. “Getting enough high-quality training data is often the most challenging piece of building an AI-based service,” the researchers wrote. “Typically, data labeled by humans is of high quality (has relatively few mistakes) but comes at high cost — both in terms of money and time. On the other hand, automatic approaches allow for cheaper data generation in large quantities but result in more labeling errors (‘label noise’).”

Under normal circumstances, training machine learning algorithms is a thankless and time-consuming task. Researchers may need to collect and label millions of data samples, and this is usually done manually, albeit with shortcuts. One such shortcut is data scraping: using a search engine to collect results for each item on a list of categories and labeling every result with the category it was searched for. While this method is widely used, it is also problematic, because not all of the results are relevant to the category. Those mislabeled examples end up in the training data, and errors in the training data can lead to inaccuracies in the resulting model.
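As a rough illustration of label noise, here is a minimal, hypothetical sketch (not from the researchers) that estimates how noisy a scraped dataset is by comparing its labels against a small human-checked sample. It assumes the scraped data is a list of `(item_id, label)` pairs and the human labels are a dictionary; both structures and the function name `estimate_noise_rate` are illustrative assumptions.

```python
from typing import Dict, List, Tuple


def estimate_noise_rate(
    scraped: List[Tuple[str, str]],
    verified: Dict[str, str],
) -> float:
    """Estimate the label-noise rate of scraped data from a human-checked subset.

    scraped:  (item_id, label assigned by the search-scraping step)
    verified: item_id -> label assigned by a human annotator
    Only items that appear in both are compared.
    """
    checked = [(item, label) for item, label in scraped if item in verified]
    if not checked:
        raise ValueError("no overlap between scraped and verified items")
    # Count spot-checked items whose scraped label disagrees with the human label.
    wrong = sum(1 for item, label in checked if verified[item] != label)
    return wrong / len(checked)


if __name__ == "__main__":
    scraped = [
        ("img1", "donut"),
        ("img2", "donut"),      # hypothetical scraping error: actually a bagel
        ("img3", "waffle"),
        ("img4", "onion soup"),
        ("img5", "hamburger"),  # never checked by a human, so ignored
    ]
    verified = {"img1": "donut", "img2": "bagel", "img3": "waffle", "img4": "onion soup"}
    print(estimate_noise_rate(scraped, verified))  # 0.25
```

The human-checked sample is the expensive, high-quality data the researchers describe; the scraped list is the cheap, noisier data. A spot check like this only measures the problem, it does not fix any labels.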

Solution

The Bing team has taken a significant step toward solving this problem. By developing a dedicated AI model, the researchers were able to root out labeling errors in real time: “The crux of the solution is to teach the AI model what a typical error looks like for a few example categories (such as ‘Donuts’, ‘Onion Soup’, ‘Waffle’ in the picture below) and then use techniques such as ‘transfer learning’ and ‘domain adaptation’ to get the model to intelligently use those ‘learnings’ in all the other categories where human labeled examples are not available (‘Hamburger’).” In other words, the model learns what mislabeled search-scraped results tend to look like and can flag them. Microsoft says the new system works “very well” and reliably identifies which category labels are correct and which are incorrect. Notably, the model can do this even for categories that have no human-verified labels.
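The article does not include code, and what follows is not Microsoft's implementation. It is a minimal, hypothetical sketch of the general idea in the quote: learn what a mislabeled sample looks like on a few human-verified categories, then reuse that knowledge on categories with no human labels. It assumes each image has already been turned into a feature vector (for example by a pretrained network; here, toy 2-D vectors), scores each sample by cosine similarity to the mean vector of its own category, fits one similarity threshold on the verified categories, and applies that threshold to the rest. All function names and data are illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def prototype(vectors: List[Vector]) -> List[float]:
    """Mean feature vector of all samples scraped for one category."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def scores(samples: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """Similarity of each sample to its own category's (noisy) prototype."""
    out = {}
    for cat, vecs in samples.items():
        p = prototype(vecs)
        out[cat] = [cosine(v, p) for v in vecs]
    return out


def fit_threshold(scored: List[Tuple[float, bool]]) -> float:
    """Choose the cutoff (midpoint between adjacent scores) that best separates
    human-verified correct (True) from incorrect (False) labels."""
    values = sorted({s for s, _ in scored})
    if len(values) == 1:
        return values[0]
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best_t, best_acc = candidates[0], -1.0
    for t in candidates:
        acc = sum((s >= t) == ok for s, ok in scored) / len(scored)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t


def flag_noisy(
    samples: Dict[str, List[Vector]],
    verified: Dict[str, List[bool]],
) -> Dict[str, List[bool]]:
    """Learn a threshold on verified categories; predict label correctness
    (True = label looks correct) for every category without human labels."""
    s = scores(samples)
    train = [(sc, ok) for cat, oks in verified.items() for sc, ok in zip(s[cat], oks)]
    t = fit_threshold(train)
    return {cat: [sc >= t for sc in s[cat]] for cat in samples if cat not in verified}


if __name__ == "__main__":
    samples = {
        "donut": [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]],        # last one mislabeled
        "waffle": [[0.0, 1.0], [0.1, 0.9], [1.0, 0.0]],       # last one mislabeled
        "hamburger": [[1.0, 1.0], [0.9, 1.1], [-1.0, 1.0]],   # no human labels
    }
    verified = {"donut": [True, True, False], "waffle": [True, True, False]}
    print(flag_noisy(samples, verified))  # {'hamburger': [True, True, False]}
```

Because the threshold is learned on similarity scores rather than on any one category, it can be applied to "hamburger" without any human labels for that category, which is the transfer the quote describes. A production system would more likely learn the feature embedding and the similarity measure jointly instead of relying on a plain mean and a single fixed cutoff.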